Week 19: Testing & User Requests

This week we continued with our testing efforts and acted on more user feedback.

Statistics Page User Requests

This week we worked on further user requests that we received through the UAT form and in the meeting we had with our users.

Analytics

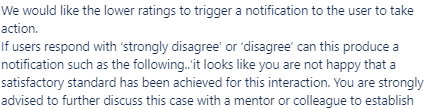

In the meeting we had with the users one of the requests was:

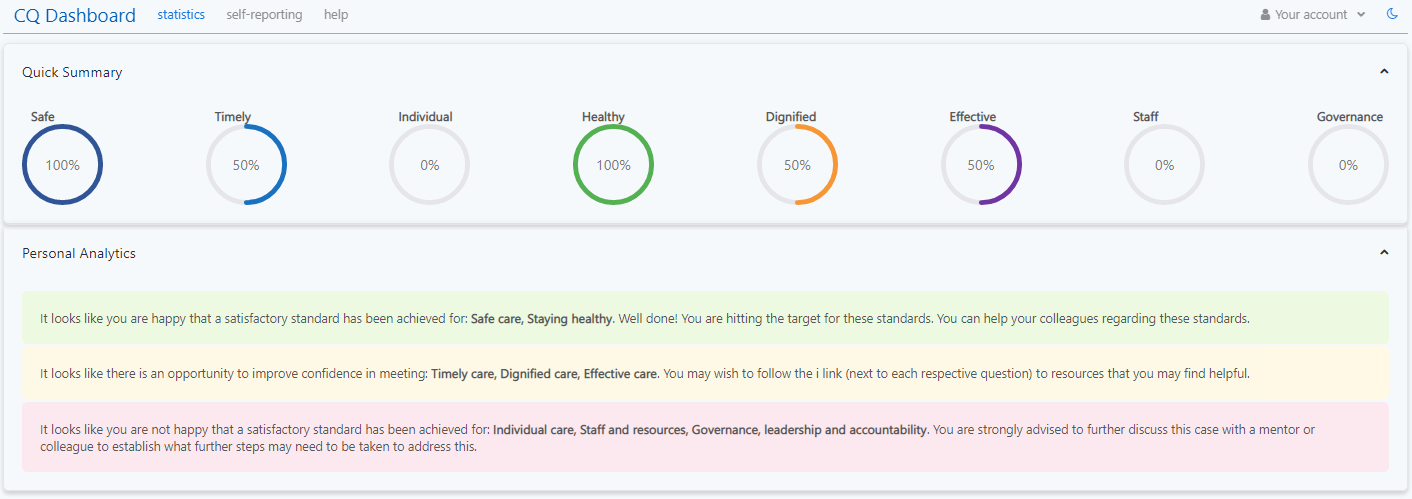

We agreed this would be a great idea to spark conversations, therefore we have implemented a ‘personal analytics’ section on the Statistics page which provides insights on previous self reports.

This section also shows a message if you haven’t completed a self report in the last week. To implement it, we used the React Suite Panel component to create another accordion, as we did for the circles.

We then used the React Suite Message component to display each message in a colour that corresponds to its tone (positive, neutral, etc.).
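As a rough illustration of the logic behind these messages, the sketch below checks whether a reminder is due and picks a message type. It is a minimal sketch rather than our actual component: the names `needsReminder`, `messageTypeFor` and the `Tone` labels are hypothetical, and the mapping from tone to colour is an assumption. The returned strings are values accepted by the `type` prop of the React Suite Message component.

```typescript
// Hypothetical tone labels for an insight shown in the analytics section.
type Tone = 'positive' | 'neutral' | 'negative';

// Values accepted by the `type` prop of the React Suite Message component.
type MessageType = 'success' | 'info' | 'warning' | 'error';

const WEEK_MS = 7 * 24 * 60 * 60 * 1000;

// Assumed mapping from tone to message colour.
const TYPE_BY_TONE: Record<Tone, MessageType> = {
  positive: 'success',
  neutral: 'info',
  negative: 'warning',
};

// True when there is no self report yet, or the latest one is over a week old.
function needsReminder(lastReport: Date | null, now: Date): boolean {
  if (lastReport === null) return true;
  return now.getTime() - lastReport.getTime() > WEEK_MS;
}

// Pick the Message type used to colour an insight of the given tone.
function messageTypeFor(tone: Tone): MessageType {
  return TYPE_BY_TONE[tone];
}
```

Keeping these two decisions as plain functions means they can be unit tested without rendering any component.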

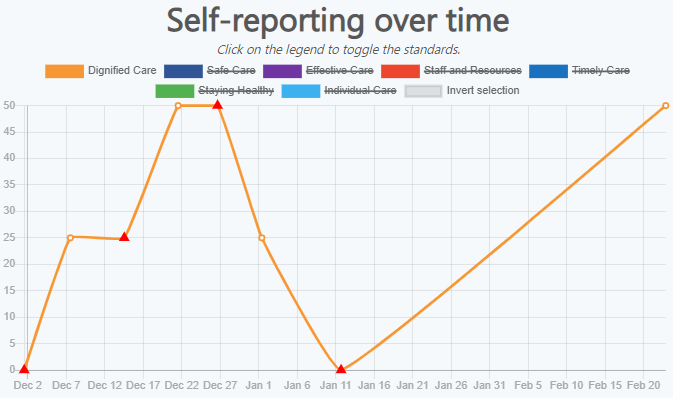

Legend Selection

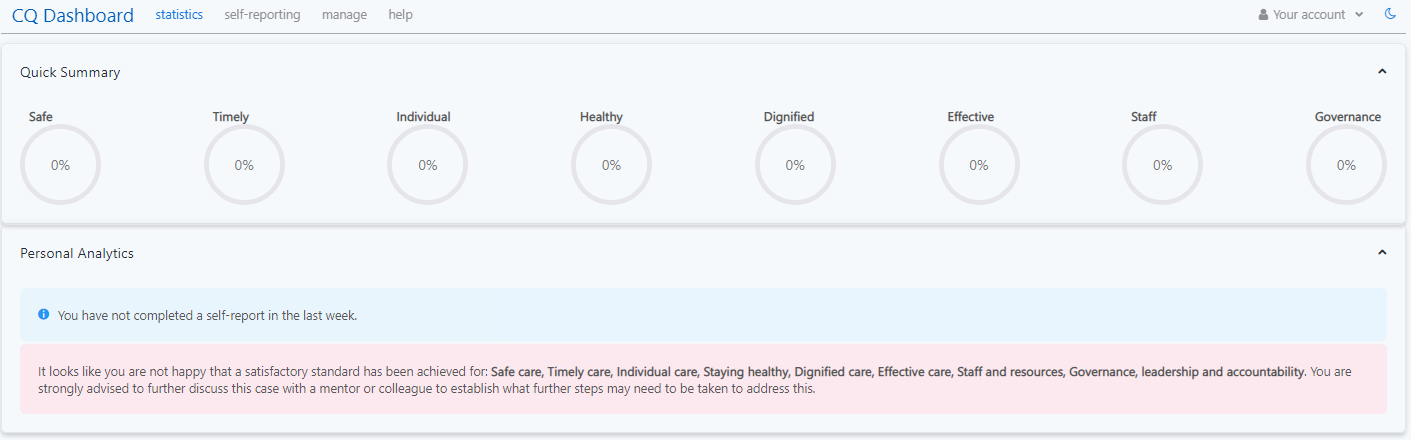

One of the users also mentioned it can be tedious and undesirable to manually toggle all the lines on the graph off if you want to see a single standard/line.

We have now tried to address this by adding an ‘invert selection’ button in the legend, meaning you can now turn off all the lines or invert your selection easily.

To implement this we read the docs of our Chart.js component thoroughly and found a useful example. Following the example's approach of overriding the legend click handler, we managed to add another button which inverts the current selection of the other legend items.
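The inversion step itself can be sketched as follows. This is a minimal sketch, not our actual handler: it assumes Chart.js v3 or later, where a chart exposes `isDatasetVisible`, `setDatasetVisibility` and `update`, and it declares a small `ChartLike` interface (a hypothetical name) so the snippet does not depend on the library being installed. The `invertSelection` name is also hypothetical.

```typescript
// Minimal structural view of the Chart.js (v3+) chart methods used here,
// declared locally so the sketch does not need the library installed.
interface ChartLike {
  data: { datasets: unknown[] };
  isDatasetVisible(datasetIndex: number): boolean;
  setDatasetVisibility(datasetIndex: number, visible: boolean): void;
  update(): void;
}

// Flip the visibility of every dataset, then redraw the chart once.
function invertSelection(chart: ChartLike): void {
  chart.data.datasets.forEach((_dataset, index) => {
    chart.setDatasetVisibility(index, !chart.isDatasetVisible(index));
  });
  chart.update();
}
```

With a real chart, a function like this could be called from a custom `onClick` handler under `options.plugins.legend` when the ‘invert selection’ entry is clicked. Starting with every line visible, one click hides them all, which covers the "show a single line" case in two clicks.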

An example of how it works is shown below:

Documentation

This week we wrote the documentation for the new AnalyticsAccordion component and finished documenting the Statistics page components (Filters, LineChart and WordCloud).

Testing

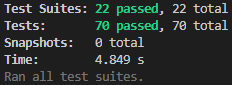

Frontend unit testing

As reported last week, we decided to create a frontend unit test suite. This week we finalised it by testing the last few components, ending up with 70 unit tests in total.

They are all now passing, which gives us confidence that our components are functioning as they should.

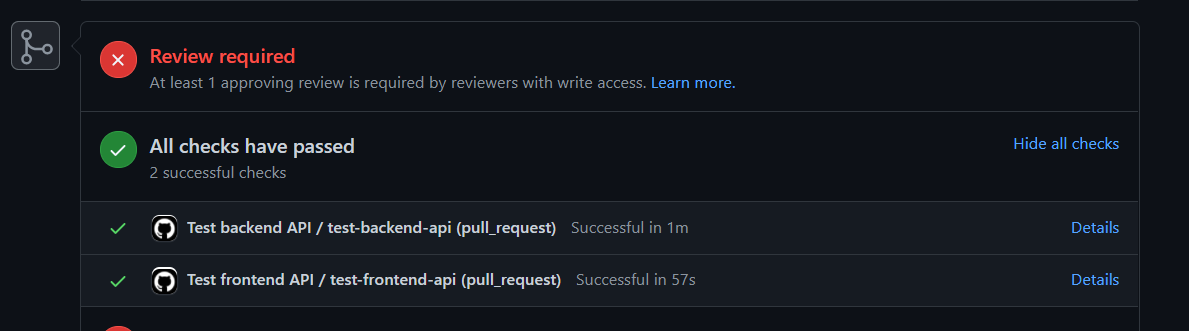

We have also configured GitHub Actions to run this test suite automatically for all Pull Requests:

This, along with our configuration to ensure main is protected and requires all tests to pass, means we will now be able to ensure that no (known) bugs or regressions are committed (and therefore deployed) to main.

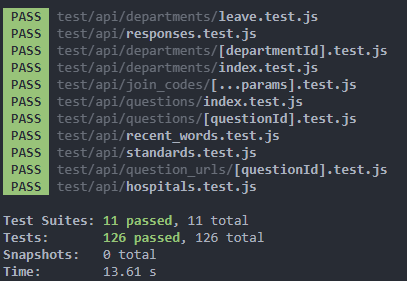

Backend integration testing

Following our research from last week, we wrote an entire test suite for our backend REST API this week. This suite includes over 100 individual tests across all our endpoints, with all tests passing:

As shown in the screenshot earlier, we have also configured GitHub Actions to run this test suite automatically for all Pull Requests, meaning both our backend and frontend are now being continuously tested as part of our Developer Operations (DevOps) workflow.

Testing the backend proved to be fairly difficult, with various issues, questions and decisions arising over the week:

- After debugging unexpected and confusing test failures whilst writing the tests, we discovered a bug in the openapi-response-validator library, which we use to check that our API responses match our OpenAPI specification.

It turns out that the library does not follow references correctly when they are used directly in response schemas. This meant, for example, that our assertions were failing because the library thought the expected response was `null` rather than the schema described in our specification.

Following this discovery and further research, we found this is a known bug, reported on their GitHub Issues: https://github.com/kogosoftwarellc/open-api/issues/483 which is yet to be fixed.

Due to lack of time, we were not able to investigate the library further, for example to submit a Pull Request to the maintainer to fix the bug.

Instead, we are now using the json-schema-ref-parser library to parse and ‘dereference’ our OpenAPI spec (JSON schema) — this means that the response validator no longer needs to follow references, so our assertions now work!

- Mocking sessions

A large part of our API is to do with authentication and authorisation — certain user types can only perform certain actions, so there are guards against users performing unwanted/unauthorised actions.

As a result, testing our session and guard logic was crucial for our test suite.

Following research on Jest, we discovered that it has a built-in way to mock calls to any method, library or function, so we are now mocking NextAuth.js’ `useSession` and `getSession` calls to return a fake session object with custom-specified roles for each test case.

This means we do not need to interact with Keycloak or its database in our tests, which makes the tests easier to reason about and keeps the suite focused on our own session and guard logic.

- Testing the database

As we were writing integration tests, we needed to test that the integration of our API with our database was working correctly.

This came with the challenge of maintaining a database such that different tests don’t interact with each other negatively, whilst keeping performance and speed at a high level so our test suite runs quickly.

Following research, we decided to create a custom Jest Test Environment with custom `setup` and `teardown` functions. Each test suite (test file) is now run in its own Test Environment, which runs the `setup` method before any tests in the suite (file) run. Our `setup` function destroys the PostgreSQL schema and recreates it, then populates it with our own tables. This is followed by seeding the database with the bare minimum required for the system to work: a few departments, hospitals, health boards, and the questions and standards for them.

We defined IDs for specific entities that are used throughout our test suite, so we can now easily query for data in our tests given these known IDs.

We also created a new Docker Compose configuration for this test suite, which creates a single PostgreSQL container with a single database, `test`. This is what is used in our CI.

To aid development, we also added the `test` database to our development Docker Compose configuration, so we won’t have to spin up a new container to test our backend locally!
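To illustrate why dereferencing sidesteps the validator bug described in the first point above, here is a minimal sketch of what dereferencing does for local `#/...` references. It is not the json-schema-ref-parser implementation (which handles far more cases, such as external files); the `Json` type and the `resolvePointer` and `dereference` helpers are hypothetical, and the sketch assumes there are no circular references and no references into arrays.

```typescript
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Look up a local JSON pointer such as "#/components/schemas/User".
function resolvePointer(root: Json, ref: string): Json {
  if (ref.slice(0, 2) !== '#/') throw new Error(`Only local refs are supported: ${ref}`);
  let node: Json = root;
  for (const rawKey of ref.slice(2).split('/')) {
    // JSON Pointer escapes: "~1" means "/", "~0" means "~".
    const key = rawKey.replace(/~1/g, '/').replace(/~0/g, '~');
    if (node === null || typeof node !== 'object' || Array.isArray(node)) {
      throw new Error(`Cannot resolve ${ref}`);
    }
    const next: Json | undefined = node[key];
    if (next === undefined) throw new Error(`Cannot resolve ${ref}`);
    node = next;
  }
  return node;
}

// Return a copy of `node` in which every { "$ref": "#/..." } object is
// replaced by the (recursively dereferenced) value it points to.
function dereference(root: Json, node: Json = root): Json {
  if (node === null || typeof node !== 'object') return node;
  if (Array.isArray(node)) return node.map((item) => dereference(root, item));
  const ref = node['$ref'];
  if (typeof ref === 'string') return dereference(root, resolvePointer(root, ref));
  const out: { [key: string]: Json } = {};
  for (const key of Object.keys(node)) {
    const value = node[key];
    if (value !== undefined) out[key] = dereference(root, value);
  }
  return out;
}
```

After this step a response schema contains the full schema inline instead of a `$ref`, so a validator never has to follow a reference itself.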
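For the session mocking described in the second point above, the idea of a fake session with per-test roles can be sketched like this. It is a minimal sketch under assumptions: the `FakeSession` shape, the `fakeSession` helper and the `isAllowed` guard are hypothetical names, and the real NextAuth.js session object and our real guards carry more detail.

```typescript
// Hypothetical shape of the session our guards read; the real NextAuth.js
// session object has more fields.
interface FakeSession {
  user: { id: string; roles: string[] };
}

// Test helper: build a session with exactly the roles a test case needs.
function fakeSession(roles: string[], id: string = 'test-user'): FakeSession {
  return { user: { id, roles } };
}

// Hypothetical guard: allow the action only if the session holds at least
// one of the allowed roles. A missing session is always rejected.
function isAllowed(session: FakeSession | null, allowedRoles: string[]): boolean {
  if (session === null) return false;
  return session.user.roles.some((role) => allowedRoles.indexOf(role) !== -1);
}
```

In a Jest suite, a helper like `fakeSession` could supply the value returned by a mocked `getSession`, for example by calling `jest.mock` on the NextAuth.js client module (the module path depends on the NextAuth.js version) and then using `mockResolvedValue` on the mocked function.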
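The order of operations in the database `setup` step from the third point above can be sketched as a plain list of SQL statements. This is a minimal sketch, not our actual Test Environment: the `buildResetPlan` function, the `SEED_IDS` values and the use of the `public` schema are assumptions for illustration, and the table and seed statements are passed in rather than shown.

```typescript
// Known IDs shared between the seed data and the tests (hypothetical values).
const SEED_IDS = { healthBoard: 1, hospital: 1, department: 1 } as const;

// Build the ordered SQL statements to run before each test file:
// drop the schema, recreate it, create the tables, then seed the minimum data.
// `createTablesSql` and `seedSql` stand in for the project's own statements.
function buildResetPlan(createTablesSql: string[], seedSql: string[]): string[] {
  return [
    'DROP SCHEMA IF EXISTS public CASCADE',
    'CREATE SCHEMA public',
    ...createTablesSql,
    ...seedSql,
  ];
}
```

A custom Test Environment's `setup` method could then execute each statement in order against the `test` database. Because the seed uses fixed IDs, tests can query for those rows directly instead of first searching for them.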

Despite the challenges, we found this to be very beneficial, as we were able to fix bugs spread out across our API (e.g. unexpected response types and unguarded endpoints) whilst developing the test suite — meaning our tests work!

Next steps

Having mostly completed our unit and integration testing, we will now look to our final mode of testing: end-to-end testing of the platform. This will involve using a headless browser to interact with the web app programmatically and asserting that the displayed UI is correct based on the actions performed.

Once the end-to-end testing is complete we will be confident that the platform works as intended before we hand over the code near the end of March.

In parallel to this, we will be working on our final Report Website, along with Deployment Documentation and any remaining User Acceptance Testing.