Week 18: Documentation & Unit testing

This week we completed most of our documentation and began unit/integration testing of the platform.

User Manual Update

Because we updated various features of the platform, we added explanations to the user manual on how to use them. These include how the new word cloud works with enablers and barriers.

We currently write the user manual in Microsoft Word Online. Every time we update our features (which is frequent), we have to write the explanations in Word, download the document as a PDF and then upload it to GitHub. This process is very tedious, so we are considering moving the user manual to Google Docs, where users would see any updates to the document directly in “real time” and the process would be much easier.

Another alternative approach we are considering is using something like Docusaurus so that we can keep all our documentation in GitHub itself.

Documentation

This week we completed the majority of our documentation for frontend and backend.

Frontend

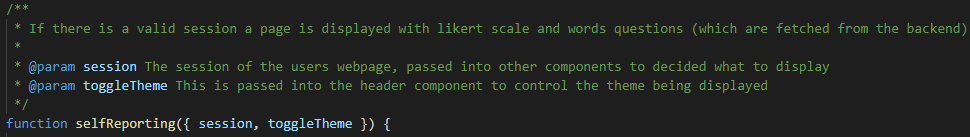

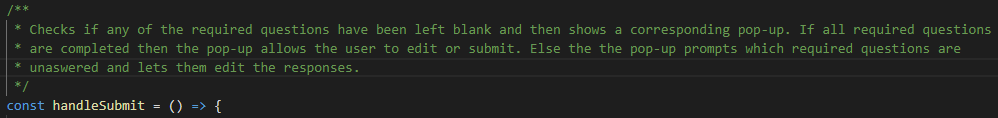

To finish off the frontend part of the documentation, only the pages in our codebase remained (we finished the components documentation using Docz last week).

We searched online for guidance on documenting React/Next.js pages, but found nothing specific, so we decided to stick with the ‘standard’ of JSDoc. This allowed us to explain each page along with its parameters, and to document any non-trivial functions the pages use.
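As a rough illustration of the JSDoc style described above, here is a minimal sketch of a documented helper. The function `formatScore` and its parameters are hypothetical and are not taken from our codebase; they only show the shape of a description with `@param` and `@returns` tags.

```typescript
/**
 * Formats a raw score as a percentage label for display on a page.
 * (Hypothetical helper, used only to illustrate the comment layout.)
 *
 * @param {number} score - Raw score between 0 and `max`.
 * @param {number} max - Highest possible score; must be greater than 0.
 * @returns {string} The percentage rounded to the nearest whole number.
 * @throws {RangeError} If `max` is zero or negative.
 */
function formatScore(score: number, max: number): string {
  if (max <= 0) {
    throw new RangeError("max must be greater than 0");
  }
  return `${Math.round((score / max) * 100)}%`;
}
```

The same layout can sit above a page component, with one `@param` line per prop.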

Some examples are below:

Backend

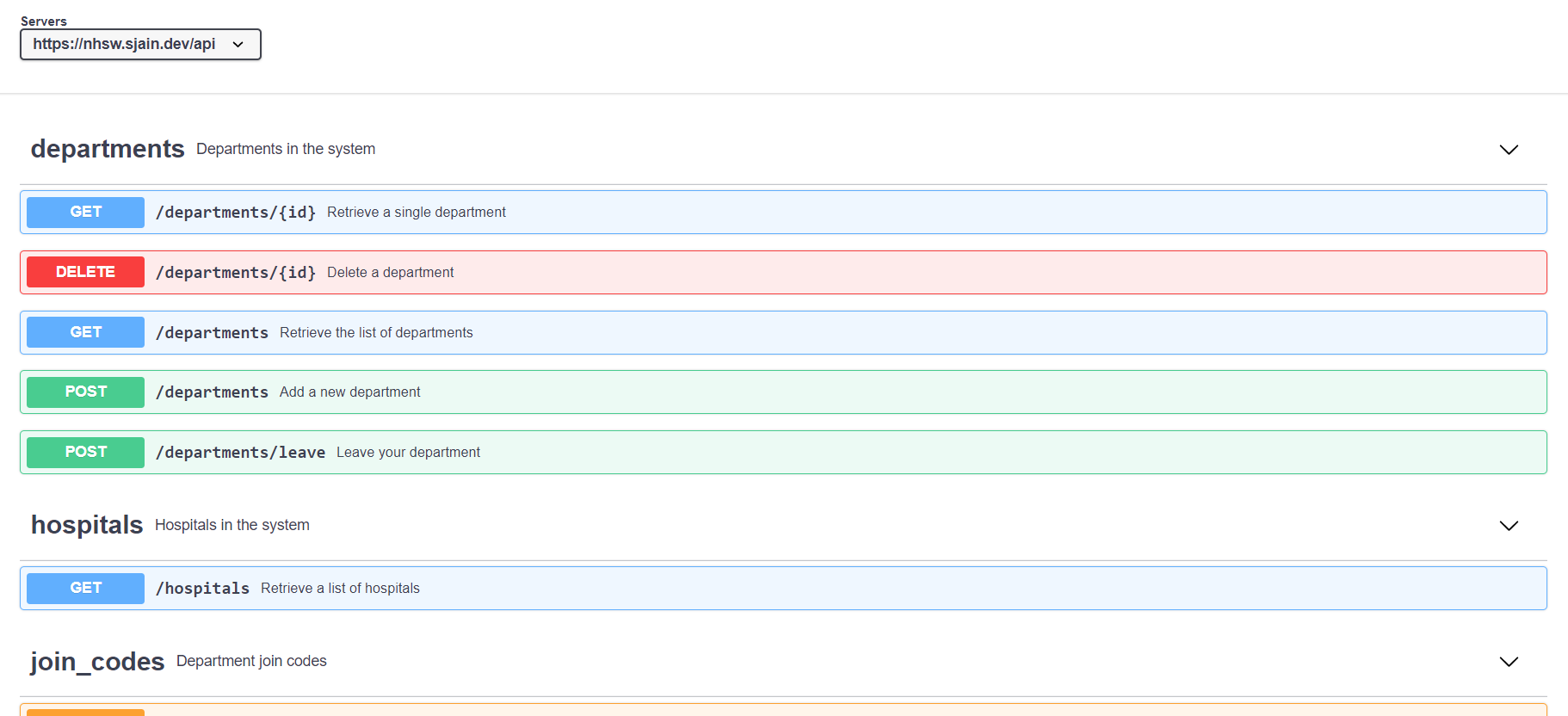

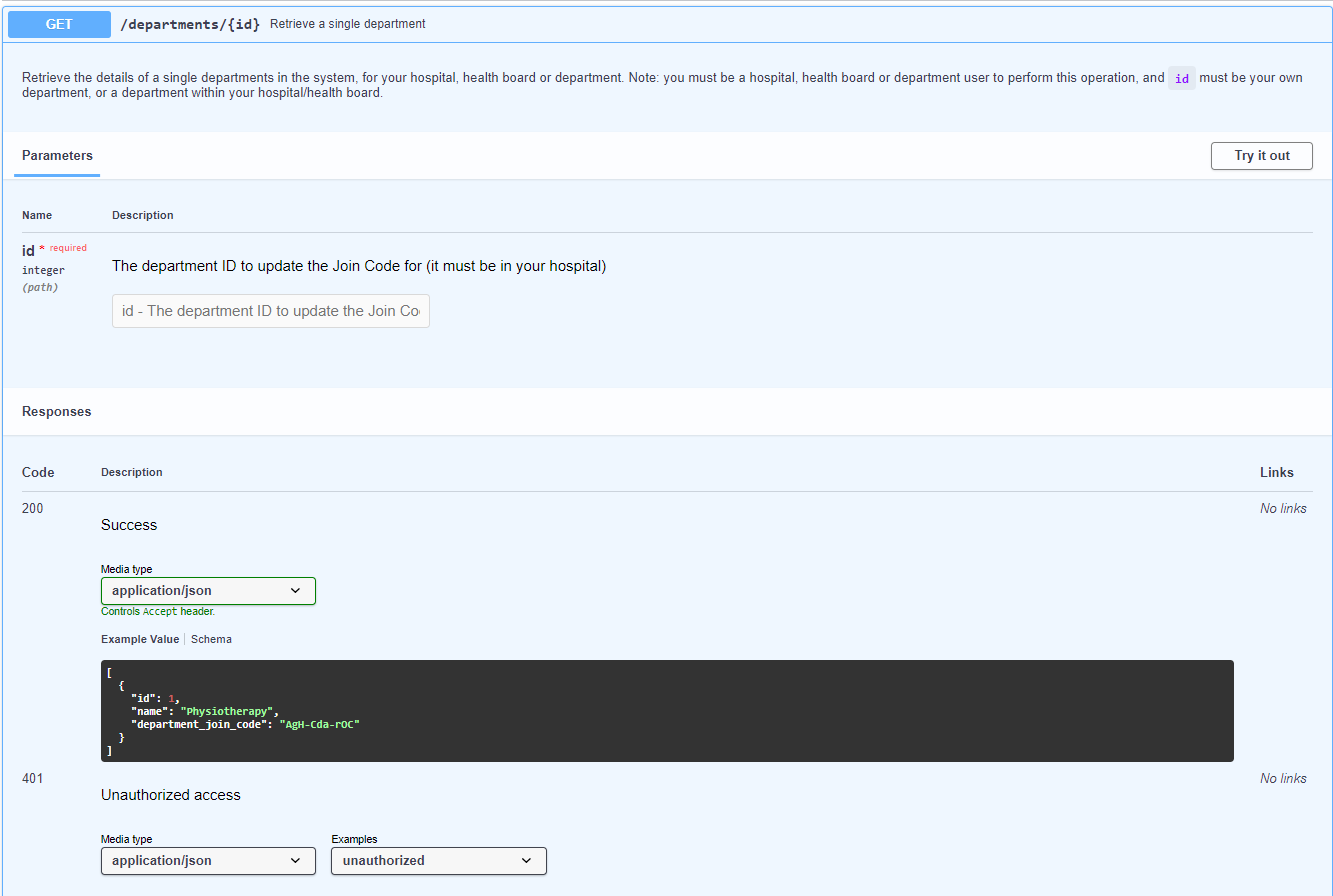

We completed the OpenAPI specification for our REST API this week. We used [swagger-jsdoc](https://github.com/Surnet/swagger-jsdoc) to write the YAML notation inline in our code, which is then compiled into a single OpenAPI JSON specification.
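To give an idea of what the inline notation looks like, here is a minimal sketch of a route handler annotated with an `@openapi` comment, which is the tag swagger-jsdoc looks for. The `/api/health` path, the response shape and the `MinimalResponse` type are assumptions made for this example only; they are not part of our actual API.

```typescript
// Structural stand-in for the response object of an API route (an assumption,
// so the sketch does not depend on any framework types).
type MinimalResponse = {
  status: (code: number) => MinimalResponse;
  json: (body: unknown) => void;
};

/**
 * @openapi
 * /api/health:
 *   get:
 *     summary: Report whether the API is reachable (hypothetical endpoint).
 *     responses:
 *       200:
 *         description: The API is up.
 *         content:
 *           application/json:
 *             schema:
 *               type: object
 *               properties:
 *                 status:
 *                   type: string
 */
function handler(_req: unknown, res: MinimalResponse): void {
  // The handler body should match what the YAML above promises.
  res.status(200).json({ status: "ok" });
}
```

Keeping the YAML next to the handler makes it easier to update the two together when a route changes.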

Our final specification can be found here.

Our rendered API documentation can be found here. Screenshots of the docs are below:

Testing

This week we started focusing on testing our platform, to confirm that it is fully functional and to spot any hidden bugs before we hand over the code.

Frontend

This week we worked on creating a frontend test suite for our platform. After doing some research on how to test React/Next.js projects we learnt that a common method is using Enzyme and Jest.

Enzyme allows us to manipulate/use components in our test suite using methods such as mount (to render a component for testing purposes) and simulate (to simulate interactions with components, like clicks).

Jest, on the other hand, is an assertion library and test runner. It is an open source JavaScript testing framework which is very well documented and has a wide range of APIs, giving us great flexibility to test in a variety of ways. Another advantage of Jest is that tests are isolated and can be parallelized, which allows us to build a large test suite that still runs in seconds.

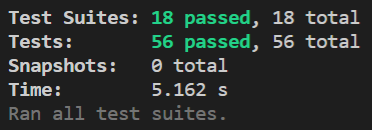

Currently we have created unit tests for most of our components with 56 tests altogether and no test failures:

Backend

We also began working on the backend test suite this week. This required research into best practices and into which type of testing is preferred for REST (CRUD) APIs.

Following research we have decided against unit-testing the API, as it would be very complex to isolate the logic of the APIs from the network handling and database interactions. Moreover, we would definitely like to test the database interaction aspect of our API, so isolating it would make less sense for this platform.

As a result, we will be performing integration testing on our REST API to test the Next.js route handling through to the database interactions.

We’re still in the process of determining the best method of performing these tests. However, it is likely we will use Jest (as with the frontend) as our test runner and assertion library.

One difficulty we have identified so far is how to create sessions dynamically. It is likely we will end up writing a helper method that sends a login request to obtain a session token, which our tests will then use to authenticate their requests.
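A helper of this kind might look roughly like the sketch below. Everything specific in it is an assumption: the `/api/login` path, the `{ token }` response body and the `Bearer` header scheme are placeholders, and the real platform may well use a session cookie instead. The fetch function is passed in so the helper can be exercised without a running server.

```typescript
// Minimal fetch-like signature (an assumption) so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json: () => Promise<unknown> }>;

// Sends a login request and returns the session token from the response.
async function loginForToken(
  baseUrl: string,
  credentials: { email: string; password: string },
  fetchImpl: FetchLike
): Promise<string> {
  const res = await fetchImpl(`${baseUrl}/api/login`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(credentials),
  });
  if (!res.ok) {
    throw new Error(`Login failed with status ${res.status}`);
  }
  const body = (await res.json()) as { token?: unknown };
  if (typeof body.token !== "string") {
    throw new Error("Login response did not include a token");
  }
  return body.token;
}

// Builds the headers a test would attach to authenticated requests.
function authHeaders(token: string): Record<string, string> {
  return { Authorization: `Bearer ${token}` };
}
```

In a test suite, the token could be fetched once in a setup hook and reused across the requests in that file.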

We also discovered the [jest-openapi](https://github.com/openapi-library/OpenAPIValidators/tree/master/packages/jest-openapi#readme) open-source matcher, which lets us assert that our HTTP responses match our OpenAPI specification. One benefit is that the specification does part of the testing work for us: we don’t have to write separate code to check response structure when it is already described in the specification.

We also looked into Jest Test Environments, which seem useful for our backend tests. We will probably use them to perform different setups and teardowns for frontend and backend tests, so we don’t perform unnecessary setup actions for each test suite.

Finally, our current thinking for the database side of backend testing is to create a new PostgreSQL schema with a random name for each test, with freshly seeded data that can be modified without affecting the other tests.
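The schema-per-test idea could be sketched as follows. This is not our implementation: the `Query` type, the `withTestSchema` name and the seeding callback are hypothetical, and the sketch assumes every query runs on a single dedicated connection, because `SET search_path` only applies to the current session.

```typescript
// Hypothetical query function; a real one would wrap a PostgreSQL client.
type Query = (sql: string) => Promise<void>;

// Builds a schema name like "test_k3x9a0b2c7dq" from lowercase letters and digits.
function randomSchemaName(): string {
  const alphabet = "abcdefghijklmnopqrstuvwxyz0123456789";
  let suffix = "";
  for (let i = 0; i < 12; i++) {
    suffix += alphabet[Math.floor(Math.random() * alphabet.length)];
  }
  return `test_${suffix}`;
}

// Creates a fresh schema, seeds it, runs the test body, then always drops it.
async function withTestSchema<T>(
  query: Query,
  seed: (query: Query) => Promise<void>,
  run: (schema: string) => Promise<T>
): Promise<T> {
  const schema = randomSchemaName();
  await query(`CREATE SCHEMA "${schema}"`);
  try {
    // Unqualified table names now resolve inside the new schema.
    await query(`SET search_path TO "${schema}"`);
    await seed(query);
    return await run(schema);
  } finally {
    // CASCADE removes the seeded tables together with the schema.
    await query(`DROP SCHEMA IF EXISTS "${schema}" CASCADE`);
  }
}
```

Because the drop happens in a `finally` block, a failing test should not leave its schema behind.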

We hope to have written these tests by next week, after more research on the best API testing technique.

Development: bug fix

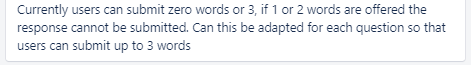

Our user acceptance testing is ongoing, and this week our users found a small bug on the self-reporting page. As described below, users could only enter either 3 or 0 words and nothing in between.

We debugged this locally and found the cause. We call the toLowerCase() method so that all words are stored in lower case, but when only 1 or 2 words were entered it was also being called on the empty input boxes. The method was therefore being called on null, which caused the error. To fix this, we added a check that each inputted word is not null before sending it. Users can now enter 0 to 3 words inclusive.
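The guard can be illustrated with a small sketch. The function name `normaliseWords` and the array-of-inputs shape are assumptions for this example, and dropping blank strings as well as null values is an extra step that goes slightly beyond the fix described above.

```typescript
// Keeps only the boxes that were filled in, then lower-cases them.
// Calling toLowerCase() directly on a null entry is what caused the original error.
function normaliseWords(inputs: Array<string | null | undefined>): string[] {
  return inputs
    .filter((word): word is string => word != null && word.trim() !== "")
    .map((word) => word.trim().toLowerCase());
}
```

For instance, `normaliseWords(["Stress", null, null])` yields a one-element array containing `"stress"` instead of throwing.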

Next steps

We will now continue our testing efforts and work on some user requests in parallel.

Our aim is to finish all development, documentation, and testing within the next 2 weeks.